Tuesday, December 29, 2009

CheckPoint VPN-1 SecureClient on Snow Leopard

I took it upon myself to modify the package and am providing it here. Use it at your own risk. cksum produces a CRC checksum, so it can tell you whether the download arrived intact but not who created the file. Run the following command to get the checksum:

cksum SecureClient-VPN-1.zip

You should get the following output:

3505974925 22321216 SecureClient-VPN-1.zip
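If you'd rather not compare the numbers by eye, the check can be scripted. This is a minimal sketch; the function name verify_cksum is made up for illustration, and it relies on cksum printing only the CRC and the size when it reads from standard input.

```shell
# verify_cksum FILE EXPECTED_CRC EXPECTED_SIZE
# (hypothetical helper, not part of the original package)
verify_cksum() {
    file=$1
    want_crc=$2
    want_size=$3
    # Reading from stdin makes cksum print "<crc> <size>" with no file name.
    got=$(cksum < "$file") || return 2
    if [ "$got" = "$want_crc $want_size" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file (got $got)" >&2
        return 1
    fi
}
```

For the file above you would call it as: verify_cksum SecureClient-VPN-1.zip 3505974925 22321216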

Sunday, December 20, 2009

Boot Camp x64 is Unsupported on this Computer Model

Tuesday, December 15, 2009

OSX and /etc/resolv.conf

/private/etc/resolv.conf is itself a symlink to /var/run/resolv.conf which is the file that is auto-generated. I ended up discovering that after looking at the /private/etc/resolv.conf file in my oldest Time Machine backup. That'll learn me.
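A quick way to check this sort of thing before editing a file is to ask whether it is a symlink and where it points. A small sketch, with show_link being a name I made up for illustration:

```shell
# show_link PATH: print where PATH points if it is a symlink.
# (hypothetical helper name)
show_link() {
    if [ -L "$1" ]; then
        printf '%s -> %s\n' "$1" "$(readlink "$1")"
    else
        printf '%s is not a symlink\n' "$1"
    fi
}
```

Running show_link /private/etc/resolv.conf on the Mac in question should show the auto-generated file it points to.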

Tuesday, November 10, 2009

Accessing the KDE Wallet from the Cmdline

I didn't have any luck finding a perl interface to the KDE wallet. However, thanks to the good folks at #kde on freenode, I found that the KDE wallet - and lots of other applications as well - expose their interfaces over D-Bus. This was the first time I'd dealt with D-Bus so it took some getting used to but I figured out how to read my kmail password from my KDE wallet. KDE comes with the handy qdbus program that allows command-line testing of the D-Bus interface.

What follows are step-by-step instructions on how to use qdbus to open your KDE wallet and read your kmail password. I'll incorporate this into my one-way sync experiment using the Net::DBus perl module but I wanted to put this out there in case someone else was looking at that.

Introduction to qdbus

A quick intro to qdbus before we get started so you can explore other options instead of just kwalletd:

The following command shows all applications exposing a DBus interface:

$ qdbus

:1.50

org.gtk.vfs.Daemon

:1.51

:1.52

:1.54

org.kde.kwalletd

:1.56

org.kde.printer-applet-3206

:1.57

net.update-notifier-kde-3203

:1.58

The :1.N entries are unique connection names and the dotted strings are well-known service names; both refer to applications. Since most applications expose a recognizable string, it's common to use just the strings and ignore the numbers.

The following command lists all the DBus paths exposed by the kwalletd application:

$ qdbus org.kde.kwalletd

/

/MainApplication

/modules

/modules/kwalletd

The /MainApplication path is mainly used when you want to interact with the application itself and you'll find many applications that expose a /MainApplication path. I haven't explored this much but it looks like it should be interesting.

With that introduction to qdbus you should have enough to explore further on your own.

Getting a Password from a KDE Wallet

The following steps will open your default KDE wallet and get your kmail password. Each step will have an explanation, the command issued and the output of that command.

We will use the /modules/kwalletd path in the DBus interface for org.kde.kwalletd for all our password-getting needs. You can get a list of all the methods and signals exposed in the /modules/kwalletd path by using the following command:

$ qdbus org.kde.kwalletd /modules/kwalletd

method bool org.kde.KWallet.isOpen(QString wallet)

method bool org.kde.KWallet.isOpen(int handle)

method bool org.kde.KWallet.keyDoesNotExist(QString wallet, QString folder, QString key)

method QString org.kde.KWallet.localWallet()

method QString org.kde.KWallet.networkWallet()

method int org.kde.KWallet.open(QString wallet, qlonglong wId, QString appid)

method int org.kde.KWallet.openAsync(QString wallet, qlonglong wId, QString appid, bool handleSession)

method int org.kde.KWallet.openPath(QString path, qlonglong wId, QString appid)

Formatting's a bit messed up going forward. Not sure why :-(

However, let's get to work obtaining the password. First, we will open the default KDE wallet - called kdewallet. We will call the org.kde.KWallet.open method which expects a wallet name string, what appears to be a wallet id (similar to a file handle it seems) and finally an application id string. We will use "kdewallet" as the wallet name since that's the name of the default wallet in KDE. We don't know the value of the wallet id so we'll just specify 0. The application id is interesting because KDE wallet prompts the currently logged in user with the application id of any applications that call the org.kde.KWallet.open method which we're abbreviating to just open since that uniquely identifies it in the method list for the /modules/kwalletd path. Specifying a meaningful id here goes a long way to helping the user click on "Allow", "Allow Once" or "Allow Never". With all that in mind, let's use the following command:

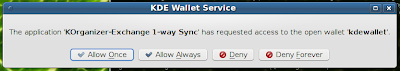

$ qdbus org.kde.kwalletd /modules/kwalletd org.kde.KWallet.open kdewallet 0 "KOrganizer-Exchange 1-way Sync"

470467109

This results in popping up a dialog box like so:

For the purpose of these experiments, I chose "Allow Once". Once I've allowed it, the qdbus call returns a wallet handle - similar to a file handle - that we'll use in all our other method calls. I did see that if I waited too long, the dialog box remained visible but qdbus timed out. However, the next org.kde.KWallet.open call then returned a valid handle without prompting, which suggests the permission grant persists. I'll have to deal with the timeouts in my perl code somehow.

The next step is to see what's stored in my wallet. This isn't strictly necessary if you already know what you want, but it serves to walk through my own discovery process. Notice I'm passing in the newly returned wallet handle as well as the same application id I sent earlier.

$ qdbus org.kde.kwalletd /modules/kwalletd folderList 470467109 "KOrganizer-Exchange 1-way Sync"

AdobeAIR

Amarok

Form Data

Network Management Passwords

bilbo

kblogger

kmail

mailtransports

Let's list the contents of the kmail folder:

$ qdbus org.kde.kwalletd /modules/kwalletd entryList 470467109 kmail "KOrganizer-Exchange 1-way Sync"

account-242017858

account-990222852

I know the account-242017858 account is the one I need the password from because the other account is older. So let's see how to retrieve that password:

$ qdbus org.kde.kwalletd /modules/kwalletd readPasswordList 470467109 kmail account-242017858 "KOrganizer-Exchange 1-way Sync"

account-242017858: [the password here]
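The steps above can be rolled into a few shell functions. This is only a sketch built from the exact qdbus calls shown in this post; the function names (kwallet, wallet_open, wallet_entries, wallet_password) and the KWALLET_APPID variable are my own inventions, and the sed at the end assumes the "key: value" output format seen above.

```shell
# Application id shown to the user in the KDE wallet prompt.
KWALLET_APPID=${KWALLET_APPID:-"KOrganizer-Exchange 1-way Sync"}

# Every call goes to the same service and path.
kwallet() {
    qdbus org.kde.kwalletd /modules/kwalletd "$@"
}

# wallet_open [WALLET]: prints the handle returned by open.
wallet_open() {
    kwallet org.kde.KWallet.open "${1:-kdewallet}" 0 "$KWALLET_APPID"
}

# wallet_entries HANDLE FOLDER: lists the keys in a folder.
wallet_entries() {
    kwallet entryList "$1" "$2" "$KWALLET_APPID"
}

# wallet_password HANDLE FOLDER KEY: prints just the password,
# stripping the leading "KEY: " from the readPasswordList output.
wallet_password() {
    kwallet readPasswordList "$1" "$2" "$3" "$KWALLET_APPID" | sed 's/^[^:]*: //'
}
```

Typical use would be handle=$(wallet_open) followed by wallet_password "$handle" kmail account-242017858. The timeout on the permission dialog still applies, so a real script would want to check that the handle is not empty before going on.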

There you go folks! That's all it takes. Please let me know if you found this helpful.

Monday, November 9, 2009

Mythbuntu 9.10 Diskless Frontend

Saturday, November 7, 2009

Seagate FreeAgent USB Drives and Linux

Lately, however, I had to work with a FreeAgent drive again and this time I found this solution to the problem by trolav that uses the power of udev and sysfs to keep the drive working whether or not it's idle. While the solution is posted on an Ubuntu forum, it works well on CentOS 5 as well.

Friday, October 30, 2009

Determining 64-bitness of your CPU

If you see any output from the following command, you're running a 64-bit capable CPU.

grep ^flags /proc/cpuinfo | grep ' lm '

The following command, if it exists on your system, will tell you the width of your physical and logical CPUs:

lshw -C cpu | grep width

The lshw command is available natively on my Ubuntu 9.04 system and is available from rpmforge.org for RHEL5 and CentOS5 systems.
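For use in scripts, the flag check can be wrapped in a function that returns an exit status instead of printing. A small sketch; the name has_lm_flag is made up, and it uses grep -w rather than the spaces around lm so the flag is also found when it happens to be the last one on the line.

```shell
# has_lm_flag [CPUINFO_FILE]: succeeds if the lm (long mode) flag is present.
# (hypothetical helper; the file argument defaults to /proc/cpuinfo)
has_lm_flag() {
    # -w matches lm only as a whole word, so lahf_lm does not count.
    grep '^flags' "${1:-/proc/cpuinfo}" | grep -qw lm
}
```

For example: has_lm_flag && echo "64-bit capable CPU"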

Friday, October 9, 2009

More Fun with PostgreSQL Date/Time

I got a number of comments from sasha2048 about the modulo, division and remainder operators for the interval data types in a previous blog entry. After playing with all the suggestions I figured it would be best to devote another blog post to the revised code for the functions and operators. The main quibble sasha2048 had with the functions was their precision - they were only good for intervals expressed in seconds and weren't able to handle more precise intervals e.g. in the millisecond range. Here, then, are the updated functions that have the following features:

- The concept of a modulo operator for double precision numbers where a % b = (a - floor(a/b)*b)

- Updated interval_divide and interval_modulo functions that store the epoch extracted from each interval in a double precision variable instead of an integer

- Made all functions immutable and "return null on null input"

- Added a default value for the "precision" argument in the round function - it's now set to 1 second so unless you specify a precision level, all round calls will round an interval to the nearest second.

-- Functions

create function interval_divide (interval, interval) returns double precision as $$

declare

firstEpoch constant double precision := extract(epoch from $1);

secondEpoch constant double precision := extract(epoch from $2);

begin

return firstEpoch / secondEpoch;

end

$$ language plpgsql immutable returns null on null input;

create function double_precision_modulo (double precision, double precision) returns double precision as $$

begin

return ($1 - floor($1 / $2) * $2);

end

$$ language plpgsql immutable returns null on null input;

create function interval_modulo (interval, interval) returns interval as $$

declare

firstEpoch constant double precision := extract(epoch from $1);

secondEpoch constant double precision := extract(epoch from $2);

begin

return (firstEpoch % secondEpoch) * '1 second'::interval;

end

$$ language plpgsql immutable returns null on null input;

create function round (interval, interval default '1 second'::interval) returns interval as $$

declare

quantumNumber constant double precision := round($1 / $2);

begin

return $2 * quantumNumber;

end

$$ language plpgsql immutable returns null on null input;

-- Operators

create operator % (

leftarg = double precision,

rightarg = double precision,

procedure = double_precision_modulo

);

create operator / (

leftarg = interval,

rightarg = interval,

procedure = interval_divide

);

create operator % (

leftarg = interval,

rightarg = interval,

procedure = interval_modulo

);
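The modulo definition used above, a % b = a - floor(a/b)*b, is easy to sanity-check outside the database. Here is a rough sketch in shell using awk; the function name floor_mod is made up, and awk has no floor() so it is built from int(), which truncates toward zero.

```shell
# floor_mod A B: prints A - floor(A/B)*B
# (hypothetical helper for checking the arithmetic by hand)
floor_mod() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        q = a / b
        f = int(q)          # int() truncates toward zero...
        if (f > q) f--      # ...so step down once for negative quotients
        printf "%g\n", a - f * b
    }'
}
```

With intervals expressed in seconds, floor_mod 3600 420 gives 240, i.e. 4 minutes left over when fitting 7-minute chunks into an hour, and fractional inputs such as floor_mod 1.5 0.4 work as well.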

Thursday, October 1, 2009

NFS Error Messages

While looking for a concise guide to NFS error messages I found this guide. It's short and sweet and would make a great cheat-sheet when printed out.

Saturday, September 19, 2009

International Talk Like a Pirate Day

The 5 A's

I'm a pirrate!

More from the Pirate Guys here.

Sunday, August 23, 2009

CentOS 5.3 Upgrade Woes

--> Processing Dependency: /usr/lib/python2.4 for package: gamin-python

--> Processing Dependency: /usr/lib/python2.4 for package: libxslt-python

--> Processing Dependency: /usr/lib/python2.4 for package: libxml2-python

--> Finished Dependency Resolution

gamin-python-0.1.7-8.el5.i386 from installed has depsolving problems

--> Missing Dependency: /usr/lib/python2.4 is needed by package gamin-python-0.1.7-8.el5.i386 (installed)

libxslt-python-1.1.17-2.el5_2.2.i386 from installed has depsolving problems

--> Missing Dependency: /usr/lib/python2.4 is needed by package libxslt-python-1.1.17-2.el5_2.2.i386 (installed)

libxml2-python-2.6.26-2.1.2.8.i386 from updates has depsolving problems

--> Missing Dependency: /usr/lib/python2.4 is needed by package libxml2-python-2.6.26-2.1.2.8.i386 (updates)

Error: Missing Dependency: /usr/lib/python2.4 is needed by package gamin-python-0.1.7-8.el5.i386 (installed)

Error: Missing Dependency: /usr/lib/python2.4 is needed by package libxslt-python-1.1.17-2.el5_2.2.i386 (installed)

Error: Missing Dependency: /usr/lib/python2.4 is needed by package libxml2-python-2.6.26-2.1.2.8.i386 (updates)

Some searching on Google resulted in this post from April 2009 which indicated a yum clean all would do the trick. All is well now :)

Sunday, August 16, 2009

Partially extracting a tarball

Let's say you have a gzipped tarball that contains all your logs from /var/log with fully qualified paths. One thing to remember is that GNU tar removes the leading / from all member names by default (unless you pass -P). This means if you ran the tar command as follows:

tar zcf /tmp/logs.tar.gz /var/log

you will have a gzipped tarball containing files such as:

var/log/httpd/access.log

var/log/httpd/error.log

var/log/messages

var/log/dmesg

With this in mind, if you want to extract just your web server log files located in var/log/httpd, you can use the following commandline:

tar zxf /tmp/logs.tar.gz --wildcards 'var/log/httpd/*'

The quotes are necessary so the wildcard is passed to tar as-is instead of being expanded by the shell. That command will result in the var/log/httpd directory structure being created in your current dir and you will find that directory populated with the access.log and error.log files.
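If you want to try this without touching your real logs, the whole thing can be reproduced in a scratch directory. A minimal sketch, assuming GNU tar (for --wildcards); the directory layout and file contents are made up to mirror the example above.

```shell
# Build a throwaway tree shaped like the var/log example.
work=$(mktemp -d)
mkdir -p "$work/src/var/log/httpd" "$work/out"
echo access > "$work/src/var/log/httpd/access.log"
echo error  > "$work/src/var/log/httpd/error.log"
echo msgs   > "$work/src/var/log/messages"

# Archive with relative member names, which is how tar stores them
# once the leading / has been stripped.
tar zcf "$work/logs.tar.gz" -C "$work/src" var/log

# Quote the pattern so the shell hands it to tar untouched.
tar zxf "$work/logs.tar.gz" -C "$work/out" --wildcards 'var/log/httpd/*'

# List what was actually extracted.
extracted=$(cd "$work/out" && find . -type f | sort)
echo "$extracted"
```

Only the two files under var/log/httpd should show up in the listing; var/log/messages stays in the archive.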

Tuesday, June 30, 2009

Fun with PostgreSQL and Date/Time

- interval_divide (used to power the / operator for intervals): Divides one interval by another and returns a double precision number. Its purpose is to tell you how many interval2-sized chunks of time exist in interval1.

- interval_modulo (used to power the % operator for intervals): Divides one interval by another and returns the remainder as an interval. Its purpose is to tell you how much time would be left over if you fit as many interval2-sized chunks of time as possible into interval1. You can also use it to determine whether interval1 can perfectly fit a whole number of interval2-sized chunks of time.

- round: Rounds one interval to the nearest value that will fit a whole number of interval2-sized chunks of time.

Code

Here's the code for the functions:

create function interval_divide (interval, interval) returns double precision as $$

declare

firstEpoch constant integer := extract(epoch from $1);

secondEpoch constant integer := extract(epoch from $2);

begin

return firstEpoch::double precision / secondEpoch::double precision;

end

$$ language plpgsql;

create function interval_modulo (interval, interval) returns interval as $$

declare

firstEpoch constant integer := extract(epoch from $1);

secondEpoch constant integer := extract(epoch from $2);

begin

return (firstEpoch % secondEpoch) * '1 second'::interval;

end

$$ language plpgsql;

create function round (interval, interval) returns interval as $$

declare

quantumNumber constant real := round($1 / $2);

begin

return $2 * quantumNumber;

end

$$ language plpgsql;

Here's how to create the appropriate operators using these functions:

create operator / (

leftarg = interval,

rightarg = interval,

procedure = interval_divide

);

create operator % (

leftarg = interval,

rightarg = interval,

procedure = interval_modulo

);

Usage Examples

=> select '1 hour'::interval / '5 minutes'::interval;

?column?

----------

12

(1 row)

=> select '1 hour'::interval / '7 minutes'::interval;

?column?

----------

8.57143

(1 row)

=> select '1 hour'::interval % '7 minutes'::interval;

?column?

----------

00:04:00

(1 row)

=> select '1 hour'::interval % '5 minutes'::interval;

?column?

----------

00:00:00

(1 row)

=> select round('1 hour'::interval, '7 minutes'::interval);

round

----------

01:03:00

(1 row)

=> select '1 hour 3 minutes'::interval / '7 minutes'::interval;

?column?

----------

9

(1 row)

Tuesday, June 2, 2009

Trac 0.12 brings support for multiple repositories

Wednesday, May 20, 2009

Mobile Browser Compatibility Guide

You can click through to John's blog entry on the matter or go directly to the source and look at Peter-Paul's work.

Tuesday, May 19, 2009

Autodesk Project Dragonfly

Monday, April 20, 2009

Mythbuntu 8.10 Scheduling Woes

The symptoms of the problem are that checking the schedule of upcoming recordings from the commandline returns nothing.

root@backend0:~# mythbackend --printsched

2009-04-20 23:23:55.207 Using runtime prefix = /usr

2009-04-20 23:23:55.208 Empty LocalHostName.

2009-04-20 23:23:55.208 Using localhost value of backend0

2009-04-20 23:23:55.806 Cannot find default UPnP backend

2009-04-20 23:23:55.811 New DB connection, total: 1

2009-04-20 23:23:55.814 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:23:55.815 Closing DB connection named 'DBManager0'

2009-04-20 23:23:55.815 Deleting UPnP client...

2009-04-20 23:23:56.490 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:23:56.491 New DB connection, total: 2

2009-04-20 23:23:56.491 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:23:56.492 Current Schema Version: 1214

2009-04-20 23:23:56.493 New DB DataDirect connection

2009-04-20 23:23:56.494 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:23:56.496 Connecting to backend server: 192.168.2.12:6543 (try 1 of 5)

2009-04-20 23:23:56.499 Using protocol version 40

Retrieving Schedule from Master backend.

--- print list start ---

Title - Subtitle Ch Station Day Start End S C I T N Pri

--- print list end ---

However, testing the scheduling functionality returns a list of all shows that would have been recorded.

root@backend0:~# mythbackend --testsched | more

2009-04-20 23:25:54.768 Using runtime prefix = /usr

2009-04-20 23:25:54.768 Empty LocalHostName.

2009-04-20 23:25:54.769 Using localhost value of backend0

2009-04-20 23:25:55.185 Cannot find default UPnP backend

2009-04-20 23:25:55.190 New DB connection, total: 1

2009-04-20 23:25:55.194 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:25:55.195 Closing DB connection named 'DBManager0'

2009-04-20 23:25:55.195 Deleting UPnP client...

2009-04-20 23:25:56.247 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:25:56.248 New DB connection, total: 2

2009-04-20 23:25:56.248 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:25:56.249 Current Schema Version: 1214

2009-04-20 23:25:56.250 New DB DataDirect connection

2009-04-20 23:25:56.251 Connected to database 'mythconverg' at host: localhost

Calculating Schedule from database.

Inputs, Card IDs, and Conflict info may be invalid if you have multiple tuners.

2009-04-20 23:25:56.317 Speculative scheduled 45 items in 0.1 = 0.01 match + 0.06 place

--- print list start ---

Title - Subtitle Ch Station Day Start End S C I T N Pri

Sesame Street - "The Cookie To 67.1 MPTHD 21 09:30-10:30 1 5 5 w 5 -2/0

Charlie Rose 67.1 MPTHD 21 12:35-13:32 1 5 5 C 5 0/0

Two and a Half Men - "Best H.O 13.1 PN-2 21 20:30-21:00 1 5 5 C 5 0/0

Fringe - "Bad Dreams" 45.1 FOX45 H 21 21:01-22:00 1 5 5 C 5 0/0

The Mentalist - "Paint It Red" 13.1 PN-2 21 22:00-23:00 1 5 5 C 5 0/0

.

.

.

Dollhouse - "Briar Rose" 45.1 FOX45 H 01 21:01-22:00 1 5 5 C 5 0/0

Boston Legal - "Smile" 2.1 PN-7 02 23:35-00:35 1 5 5 C 5 0/0

Jericho - "Reconstruction" 54.1 WNUV HD 03 18:30-19:30 1 5 5 C 5 0/0

Desperate Housewives - "Bargai 2.1 PN-7 03 21:00-22:01 1 5 5 C 5 0/0

--- print list end ---

This puzzled me to no end. Lots of posts online talk about ensuring your mythbackend IP isn't set to 127.0.0.1 and to redo the entire setup of tuners and lineups for the backend. I checked the former and wasn't interested in the latter. I even ran mysqlrepair on the mythconverg database just in case - I found nothing.

It turns out there's a way to increase logging for specific aspects of the MythTV backend. I modified the /etc/default/mythtv-backend file by uncommenting the EXTRA_ARGS variable and setting it to:

EXTRA_ARGS="--verbose schedule"

I restarted the MythTV backend while tailing /var/log/mythtv/mythbackend.log and found this little nugget:

2009-04-20 23:41:39.464 Using runtime prefix = /usr

2009-04-20 23:41:39.465 Empty LocalHostName.

2009-04-20 23:41:39.465 Using localhost value of backend0

2009-04-20 23:41:39.472 New DB connection, total: 1

2009-04-20 23:41:39.476 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:41:39.477 Closing DB connection named 'DBManager0'

2009-04-20 23:41:39.478 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:41:39.479 New DB connection, total: 2

2009-04-20 23:41:39.480 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:41:39.481 Current Schema Version: 1214

Starting up as the master server.

2009-04-20 23:41:40.494 HDHRChan(10114243/0), Error: device not found

2009-04-20 23:41:41.506 HDHRChan(10114243/1), Error: device not found

ERROR: no valid capture cards are defined in the database.

Perhaps you should read the installation instructions?

2009-04-20 23:41:41.513 New DB connection, total: 3

2009-04-20 23:41:41.514 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:41:42.725 Main::Registering HttpStatus Extension

2009-04-20 23:41:42.725 mythbackend version: 0.21.20080304-1 www.mythtv.org

2009-04-20 23:41:42.727 Enabled verbose msgs: important general schedule

2009-04-20 23:41:42.728 AutoExpire: CalcParams(): Max required Free Space: 1.0 GB w/freq: 15 min

2009-04-20 23:41:51.927 UPnpMedia: BuildMediaMap VIDEO scan starting in :/var/lib/mythtv/videos:

2009-04-20 23:41:54.489 UPnpMedia: BuildMediaMap Done. Found 8257 objects

2009-04-20 23:43:01.513 AutoExpire: CalcParams(): Max required Free Space: 1.0 GB w/freq: 15 min

It turns out my HDHomerun wasn't being properly recognized. The lights seemed to indicate it was on the network but I power cycled it just in case. A restart of the MythTV backend revealed:

2009-04-20 23:43:18.272 Using runtime prefix = /usr

2009-04-20 23:43:18.273 Empty LocalHostName.

2009-04-20 23:43:18.273 Using localhost value of backend0

2009-04-20 23:43:18.279 New DB connection, total: 1

2009-04-20 23:43:18.283 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:43:18.284 Closing DB connection named 'DBManager0'

2009-04-20 23:43:18.285 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:43:18.286 New DB connection, total: 2

2009-04-20 23:43:18.286 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:43:18.287 Current Schema Version: 1214

Starting up as the master server.

2009-04-20 23:43:18.293 HDHRChan(10114243/0): device found at address 192.168.2.13

2009-04-20 23:43:18.295 New DB connection, total: 3

2009-04-20 23:43:18.295 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:43:18.366 HDHRChan(10114243/1): device found at address 192.168.2.13

2009-04-20 23:43:18.428 New DB scheduler connection

2009-04-20 23:43:18.428 Connected to database 'mythconverg' at host: localhost

2009-04-20 23:43:19.637 Main::Registering HttpStatus Extension

2009-04-20 23:43:19.637 mythbackend version: 0.21.20080304-1 www.mythtv.org

2009-04-20 23:43:19.637 Enabled verbose msgs: important general schedule

2009-04-20 23:43:19.638 AutoExpire: CalcParams(): Max required Free Space: 1.0 GB w/freq: 15 min

2009-04-20 23:43:21.431 Reschedule requested for id -1.

Query 0: /record.search = :NRST AND program.manualid = 0 AND program.title = record.title

2009-04-20 23:43:21.433 |-- Start DB Query 0...

2009-04-20 23:43:21.438 |-- -1 results in 0.004817 sec.

2009-04-20 23:43:21.438 +-- Done.

2009-04-20 23:43:21.440 BuildWorkList...

2009-04-20 23:43:21.440 AddNewRecords...

2009-04-20 23:43:21.451 |-- Start DB Query...

2009-04-20 23:43:21.478 |-- 160 results in 0.02642 sec. Processing...

2009-04-20 23:43:21.500 +-- Cleanup...

2009-04-20 23:43:21.501 AddNotListed...

2009-04-20 23:43:21.502 |-- Start DB Query...

2009-04-20 23:43:21.503 |-- 0 results in 0.000614 sec. Processing...

2009-04-20 23:43:21.503 Sort by time...

2009-04-20 23:43:21.503 PruneOverlaps...

2009-04-20 23:43:21.504 Sort by priority...

2009-04-20 23:43:21.504 BuildListMaps...

2009-04-20 23:43:21.505 SchedNewRecords...

2009-04-20 23:43:21.505 Scheduling:

+Charlie Rose 67.1 MPTHD 21 12:35-13:32 1 5 5 C 5 0/0

+Two and a Half Men - "Best H.O 13.1 PN-2 21 20:30-21:00 1 5 5 C 5 0/0

+Fringe - "Bad Dreams" 45.1 FOX45 H 21 21:01-22:00 1 5 5 C 5 0/0

+The Mentalist - "Paint It Red" 13.1 PN-2 21 22:00-23:00 1 5 5 C 5 0/0

+Charlie Rose 67.1 MPTHD 22 12:35-13:32 1 5 5 C 5 0/0

+Lie to Me - "Undercover" 45.1 FOX45 H 22 20:00-21:00 1 5 5 C 5 0/0

.

.

.

+Dollhouse - "Briar Rose" 45.1 FOX45 H 01 21:01-22:00 1 5 5 C 5 0/0

+Boston Legal - "Smile" 2.1 PN-7 02 23:35-00:35 1 5 5 C 5 0/0

+Jericho - "Reconstruction" 54.1 WNUV HD 03 18:30-19:30 1 5 5 C 5 0/0

+Desperate Housewives - "Bargai 2.1 PN-7 03 21:00-22:01 1 5 5 C 5 0/0

+The Big Comfy Couch - "Gimme G 67.1 MPTHD 27 05:00-05:30 1 5 5 C 5 -1/0

+Sesame Street - "The Cookie To 67.1 MPTHD 21 09:30-10:30 1 5 5 w 5 -2/0

+Sesame Street - "Firefly Show" 67.1 MPTHD 27 09:30-10:30 1 5 5 w 5 -2/0

2009-04-20 23:43:21.549 SchedPreserveLiveTV...

2009-04-20 23:43:21.549 ClearListMaps...

2009-04-20 23:43:21.550 Sort by time...

2009-04-20 23:43:21.550 PruneRedundants...

2009-04-20 23:43:21.551 Sort by time...

2009-04-20 23:43:21.552 ClearWorkList...

2009-04-20 23:43:21.554 Scheduler: Update next_record for 28

2009-04-20 23:43:21.554 Scheduler: Update next_record for 36

--- print list start ---

Title - Subtitle Ch Station Day Start End S C I T N Pri

Sesame Street - "The Cookie To 67.1 MPTHD 21 09:30-10:30 1 5 5 w 5 -2/0

Charlie Rose 67.1 MPTHD 21 12:35-13:32 1 5 5 C 5 0/0

Two and a Half Men - "Best H.O 13.1 PN-2 21 20:30-21:00 1 5 5 C 5 0/0

Fringe - "Bad Dreams" 45.1 FOX45 H 21 21:01-22:00 1 5 5 C 5 0/0

The Mentalist - "Paint It Red" 13.1 PN-2 21 22:00-23:00 1 5 5 C 5 0/0

.

.

.

Dollhouse - "Briar Rose" 45.1 FOX45 H 01 21:01-22:00 1 5 5 C 5 0/0

Boston Legal - "Smile" 2.1 PN-7 02 23:35-00:35 1 5 5 C 5 0/0

Jericho - "Reconstruction" 54.1 WNUV HD 03 18:30-19:30 1 5 5 C 5 0/0

Desperate Housewives - "Bargai 2.1 PN-7 03 21:00-22:01 1 5 5 C 5 0/0

--- print list end ---

2009-04-20 23:43:21.631 Scheduled 45 items in 0.1 = 0.01 match + 0.11 place

2009-04-20 23:43:21.634 Seem to be woken up by USER

2009-04-20 23:43:28.830 UPnpMedia: BuildMediaMap VIDEO scan starting in :/var/lib/mythtv/videos:

2009-04-20 23:43:31.404 UPnpMedia: BuildMediaMap Done. Found 8257 objects

2009-04-20 23:44:38.430 AutoExpire: CalcParams(): Max required Free Space: 1.0 GB w/freq: 15 min

In other words, sweet, sweet victory :) While the whole teardown/setup of the backend would probably have caught the tuner issue as well I find checking the tuners to be an easier solution with fewer hair pulls required :)
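Since the giveaway was sitting in the backend log all along, a quick scan for tuner errors is worth doing before anything more drastic. A small sketch; the function name tuner_errors is made up, and the patterns are simply the two error messages from my log above.

```shell
# tuner_errors [LOGFILE]: print log lines that point at a missing tuner.
# (hypothetical helper; defaults to the Mythbuntu backend log path)
tuner_errors() {
    grep -E 'HDHRChan\([^)]*\), Error: device not found|no valid capture cards' \
        "${1:-/var/log/mythtv/mythbackend.log}"
}
```

It exits non-zero when nothing matches, so something like tuner_errors || echo "tuners look fine" works as a first check.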

Thursday, April 9, 2009

Implementing a Web Downloader in bash

Thursday, April 2, 2009

The Digg Bar Bookmarklet

Enjoy!

Friday, March 27, 2009

Sprint EVDO Mobile Broadband on Ubuntu

I couldn't resist one last Google search, however, and it was worth it :) While the box for the device (a Sierra Wireless 598U) doesn't mention Linux by name, Sprint provides a full installation guide for Ubuntu (Fedora and others are there too). I was very pleasantly surprised. Everything just works!

Thursday, March 26, 2009

Multi-Column Database Indices in Sqlite3 and Ruby on Rails

In your database migration,

add_index :contests, [:category_id, :name], :unique => true

In your data model,

validates_uniqueness_of :name, :scope => :category_id

That last line in the model is key. Syntax similar to the add_index line above won't work when using the validates_uniqueness_of validator.

Saturday, March 21, 2009

The Magic SysRq Key - Commandline Edition

In any case, after manually failing the partition that was causing problems with the RAID volume I still wasn't able to bounce the box, since the RAID volume wouldn't stop or sync and actually halted the shutdown process. I thought about the magic SysRq key but realized I was ssh'ed in and that wouldn't work. For some reason I didn't think of searching for a way to invoke the magic SysRq functions from the commandline. I looked for a way to force a shutdown and found this blog. It worked like a charm!
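The linked post isn't reproduced here, but on Linux the usual way to do this is to write SysRq command letters to /proc/sysrq-trigger as root. The sketch below is my own summary under that assumption, not a copy of the linked instructions; force_reboot is a made-up name, and the optional argument exists only so the function can be tried against an ordinary file.

```shell
# force_reboot [TRIGGER_FILE]
# (hypothetical helper; TRIGGER_FILE defaults to the kernel's SysRq trigger)
# WARNING: with the default argument this reboots the machine immediately.
force_reboot() {
    trigger=${1:-/proc/sysrq-trigger}
    echo s > "$trigger"   # s: emergency sync of mounted filesystems
    echo u > "$trigger"   # u: remount filesystems read-only
    echo b > "$trigger"   # b: reboot right away, no clean shutdown
}
```

This skips the normal shutdown scripts entirely, so it's a last resort for a box that refuses to go down any other way.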

Intriguingly enough, when the host came back up the RAID volume remounted and both partitions that formed the RAID volume were fine as reported by mdadm. What's more all seemed well with the mounted volume and I was able to read/write to it just fine. I'll be keeping an eye on that for a bit.

Thursday, March 19, 2009

A Scientific View on Database Indices

It seems that either sqlite3 or RoR 2.0.5 doesn't support multi-column indices on a table. One of those two converts a single two-column index into 2 one-column indices - not quite the behavior I wanted. I'll try to switch to PostgreSQL to see if the behavior re-surfaces.

While I was scouring Google for sqlite3's limitations, however, I came across this interesting article that details the kind of scientific analysis that should be conducted on your data to determine the order of columns in a multi-column index. Very informative.

Monday, February 23, 2009

libttf.so.2 for package: nagios

I don't know what they all had done wrong but I finally figured out what I'd done wrong to deserve this error :). I'd made the mistake of installing the rpmforge-release package meant for CentOS 4 instead of CentOS 5. This meant I was trying to install the el4 version of the nagios-3.0.6 package which apparently had this dependency. Before encountering this problem, however, I'd switched to the CentOS 5 repository so I didn't make the connection until I decided to read every letter of a yum install report:

[root@services ~]# yum install nagios

Loading "priorities" plugin

Loading "fastestmirror" plugin

Loading mirror speeds from cached hostfile

* epel: mirror.hiwaay.net

* rpmforge: apt.sw.be

* base: mirror.trouble-free.net

* updates: centos.mirror.nac.net

* centosplus: centos.mirror.nac.net

* addons: mirror.nyi.net

* extras: centos.mirror.nac.net

0 packages excluded due to repository priority protections

Setting up Install Process

Parsing package install arguments

Resolving Dependencies

--> Running transaction check

---> Package nagios.i386 0:3.0.6-1.el4.rf set to be updated

--> Processing Dependency: libttf.so.2 for package: nagios

--> Finished Dependency Resolution

Error: Missing Dependency: libttf.so.2 is needed by package nagios

I then realized why this problem might've fixed itself the next day - the yum cache might've become stale enough to be rebuilt. At that time yum would realize the el4 repository no longer existed and would remove it from the cache and replace it with the information about the el5 repository. When running a yum install nagios against the el5 repository, we get much more pleasant output:

[root@services ~]# yum install nagios

Loading "priorities" plugin

Loading "fastestmirror" plugin

Loading mirror speeds from cached hostfile

* epel: mirror.hiwaay.net

* rpmforge: apt.sw.be

* base: mirror.trouble-free.net

* updates: centos.mirror.nac.net

* centosplus: centos.mirror.nac.net

* addons: mirror.nyi.net

* extras: centos.mirror.nac.net

0 packages excluded due to repository priority protections

Setting up Install Process

Parsing package install arguments

Resolving Dependencies

--> Running transaction check

---> Package nagios.i386 0:3.0.6-1.el5.rf set to be updated

--> Finished Dependency Resolution

Dependencies Resolved

=============================================================================

Package Arch Version Repository Size

=============================================================================

Installing:

nagios i386 3.0.6-1.el5.rf rpmforge 3.6 M

Transaction Summary

=============================================================================

Install 1 Package(s)

Update 0 Package(s)

Remove 0 Package(s)

Total download size: 3.6 M

Is this ok [y/N]: y

The moral of the story? After changing anything in your yum repositories, make sure to run:

yum clean all

yum makecache

Friday, February 6, 2009

Upgrading Ubuntu 8.10 (Intrepid Ibex) to KDE 4.2

Long story short, I ended up following this site on upgrading to 4.2. I ran the apt-get while I was away from my computer and when I returned, lo and behold, instead of finding a nice KDE 4.2 package install log I found an error with installing some packages. I did what anyone else would do - I didn't believe my eyes and re-ran the command hoping to brute force my way through this :) Here's what I saw:

Reading package lists... Done

Building dependency tree

Reading state information... Done

Correcting dependencies... Done

The following packages were automatically installed and are no longer required:

krita-data kword-data koshell kthesaurus kpresenter krita kugar kword ksysguardd kchart karbon compizconfig-backend-kconfig

kspread libpoppler-qt2 kplato libwv2-1c2 kexi libpqxx-2.6.9ldbl kpresenter-data

Use 'apt-get autoremove' to remove them.

The following extra packages will be installed:

kde-window-manager kdebase-workspace-data libplasma3

The following packages will be REMOVED:

libplasma2

The following NEW packages will be installed:

libplasma3

The following packages will be upgraded:

kde-window-manager kdebase-workspace-data

2 upgraded, 1 newly installed, 1 to remove and 73 not upgraded.

6 not fully installed or removed.

Need to get 0B/10.0MB of archives.

After this operation, 12.3kB of additional disk space will be used.

Do you want to continue [Y/n]?

(Reading database ... 252512 files and directories currently installed.)

Preparing to replace kde-window-manager 4:4.1.3-0ubuntu1~intrepid1 (using .../kde-window-manager_4%3a4.2.0-0ubuntu1~intrepid1~ppa7_i386.deb) ...

Unpacking replacement kde-window-manager ...

dpkg: error processing /var/cache/apt/archives/kde-window-manager_4%3a4.2.0-0ubuntu1~intrepid1~ppa7_i386.deb (--unpack):

trying to overwrite `/usr/share/kde4/apps/kconf_update/plasma-add-shortcut-to-menu.upd', which is also in package kdebase-workspace-data

dpkg-deb: subprocess paste killed by signal (Broken pipe)

Errors were encountered while processing:

/var/cache/apt/archives/kde-window-manager_4%3a4.2.0-0ubuntu1~intrepid1~ppa7_i386.deb

E: Sub-process /usr/bin/dpkg returned an error code (1)

After much searching and trying tools like synaptic and aptitude I went for the sniper rifle: dpkg. I removed the offending packages kde-window-manager and kdebase-workspace-data. Now my apt-get is running smoothly in the background. Let's hope it finishes upgrading me to KDE 4.2 :)
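The dpkg error in the log names both the package being unpacked and the package that already owns the contested file, which is exactly the pair of offending packages. As a rough sketch, those names can be pulled out of a saved log with a regular expression. The function name find_conflicts and the pattern are my own illustration, written against the message format shown in the log above; other dpkg versions may word the error differently.

```python
import re

# Pattern written against the error text in the log above (an assumption:
# other dpkg versions may phrase this message differently).
ERROR_RE = re.compile(
    r"dpkg: error processing \S*/(?P<incoming>[^_/\s]+)_\S+ \(--unpack\):\s+"
    r"trying to overwrite `(?P<path>[^']+)', "
    r"which is also in package (?P<owner>\S+)"
)


def find_conflicts(log):
    """Return (incoming package, contested file, owning package) tuples."""
    return [
        (m.group("incoming"), m.group("path"), m.group("owner"))
        for m in ERROR_RE.finditer(log)
    ]
```

Each tuple lists the package whose archive failed to unpack, the file it tried to overwrite, and the installed package that owns that file.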

Wednesday, February 4, 2009

KMail Integration with Microsoft Exchange

I haven't gotten Calendar integration working yet, but I haven't put much time into it either. This page on the KDE Wiki explains how to use webdav to perform one-way syncs against an Exchange server using a plugin for KOrganizer.

Edit: Even brought it to my attention that the KDE Wiki link is no longer working. It seems the KDE wiki has changed structure since I was last on it. I did find Jason Kasper's blog, however, where he describes a ruby-based solution for the one-way sync between an Exchange OWA server and KOrganizer.

Friday, January 30, 2009

Net-SNMP and HP/UX B10.20

Thursday, January 29, 2009

Solaris 10 and Net-SNMP

/etc/init.d/init.sma stop

This stops and disables the System Management Agent as per this section in the Solaris System Management Agent Administration Guide on Sun's site.

With the default SNMP daemon dead and disabled, you can start up the Net-SNMP daemon using its own init script.

Enjoy!

Sunday, January 25, 2009

Comcast and the Battle of the SMTP

After an email that I sent to my gmail account didn't show up even after 5 minutes, I decided to look at my mail server to see if it had recorded anything. I pulled up the admin console for my newly installed instance of Zimbra and saw about 400 deferred emails on the server status page. I was quite surprised that all those people hadn't complained. Regardless, I selected all the emails and re-queued them for delivery. Each and every one of them failed again. Errors ranged from connectivity errors to my ISP's mail relay (smtp.comcast.net) to other weird errors once my mail server connected to the relay host. Finally I tried my tried and true method - telnetting to port 25 on smtp.comcast.net and manually going through a mail session from helo to the final period. I couldn't even connect to port 25 on the mail relay server!
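The manual telnet check can be approximated in a few lines of Python. This is only a minimal sketch: the function name can_connect is made up for illustration, and a successful TCP connection shows that the port is reachable, not that the relay will actually accept mail.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the name and connects in one step.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures.
        return False


# Example use (results depend on your network and your ISP):
#   can_connect("smtp.comcast.net", 25)
#   can_connect("smtp.comcast.net", 587)
```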

It turns out Comcast had recently removed access to port 25 on its mail relay server where "recently" refers to November 28th. I'm not entirely certain why I didn't see any issues even at the end of December and beginning of January when I launched the application. In any case, not only had they switched to using port 587 instead of 25, they were also requiring authentication using the username/password associated with your Comcast account to even send email via this relay.

Fair enough. Now to configure Zimbra to support an authenticated mail relay. Luckily I got help from the Zimbra Wiki. Following the instructions to the letter (except for substituting hostnames, usernames and passwords appropriately) I was able to get mail working again.
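To test an authenticated relay independently of Zimbra, a short Python script can push a single message through port 587. Treat this as a sketch under assumptions: the function names are mine, the STARTTLS step is an assumption about what the relay expects (the requirement I actually ran into was port 587 plus a username and password), and the smtp_factory parameter exists only so the function can be exercised without a real server.

```python
import smtplib
from email.message import EmailMessage


def build_message(sender, recipient, subject, body):
    """Build a plain-text email message."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_authenticated(msg, host, username, password, port=587,
                       smtp_factory=smtplib.SMTP):
    """Send msg through a relay that requires a login on the given port."""
    with smtp_factory(host, port, timeout=30) as smtp:
        smtp.starttls()                 # assumption: relay offers STARTTLS
        smtp.login(username, password)  # relay account credentials
        smtp.send_message(msg)
```

If the login or the connection fails, smtplib raises an exception that usually says more than a pile of deferred messages in the queue does.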

Now I just have to wait for 400 emails to get delivered 3 at a time - or whatever the absurdly low limit is for the Comcast mail relay.

Friday, January 16, 2009

Paypal IPN and mc_fee

- You put a form (hidden or with bits visible) that POSTs data to Paypal

- This brings the user to Paypal where they can pay using either a credit card or their Paypal account

- As part of processing the user's payment, Paypal takes key information about the user's payment e.g. transaction id, amount, and other sundry details and POSTs them to a custom instant payment notification URL (as it happens to be called) on your site

- This URL hopefully hosts a script or other form of code that can pick up all the POSTed IPN variables and POST the information back with one extra variable to validate it

- Once this POST back to Paypal returns with a success status the application can record the user's payment and credit them appropriately.
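The last two steps of the flow above can be sketched in Python. The details here go beyond what the list spells out and come from Paypal's IPN documentation as I understand it: the extra variable is cmd=_notify-validate, placed in front of the unmodified IPN body, and Paypal answers with the text VERIFIED or INVALID. The function names and the post callable (which takes the bytes to send and returns Paypal's reply as text) are hypothetical, so that the actual HTTPS call stays out of the sketch.

```python
from typing import Callable, Dict, Optional
from urllib.parse import parse_qsl

# The one extra variable, placed in front of the original IPN body.
VALIDATE_PREFIX = b"cmd=_notify-validate&"


def build_postback(raw_body: bytes) -> bytes:
    """Return the body to POST back: the IPN exactly as received, prefixed."""
    return VALIDATE_PREFIX + raw_body


def handle_ipn(raw_body: bytes,
               post: Callable[[bytes], str]) -> Optional[Dict[str, str]]:
    """Return the IPN variables if Paypal confirms them, otherwise None.

    `post` is a hypothetical callable that sends the bytes to Paypal's
    validation URL and returns the response body as text.
    """
    reply = post(build_postback(raw_body))
    if reply.strip() != "VERIFIED":
        return None
    # Only parse and trust the variables once Paypal has confirmed them.
    pairs = parse_qsl(raw_body.decode("utf-8", "replace"),
                      keep_blank_values=True)
    return dict(pairs)
```

The application would record the payment only when handle_ipn returns a dictionary, reading fields such as the transaction id and amount from it.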

Tuesday, January 13, 2009

Recovering Virtual Machine Templates after Re-Installing VMware Virtual Center

The Easy Way

The first solution relies on the ability of VMware Infrastructure Client to convert a Template into a Virtual Machine and back. Since all virtual machines stored on ESX servers managed by a new instance of Virtual Center are automatically recognized and properly populated in the Virtual Infrastructure Client, make sure you name every template to reflect that it's a template, e.g. by putting the word "Template" somewhere in its name.

- Switch your Virtual Infrastructure Client to display both virtual machines and templates by using the keyboard shortcut Ctrl-Shift-V or by clicking on the large "Inventory" drop-down in the top toolbar and choosing "Virtual Machines and Templates".

- Right-click on each template and choose "Convert to Virtual Machine".

- Once you've converted all your templates to virtual machines you can re-install Virtual Center.

- Now re-connect to the new instance of Virtual Center and you'll see all your templates as virtual machines.

- Right-click on each virtual machine that used to be a template (see where the naming comes in?) and click on "Convert to Template".

The Elegant Way

This method doesn't require you to convert your templates to virtual machines and back. Just re-install your instance of Virtual Center and you will notice all your templates disappear the next time you connect to the new Virtual Center using your Virtual Infrastructure Client. Follow these steps to bring them back:

- Switch your Virtual Infrastructure Client to display your datastores by using the keyboard shortcut Ctrl-Shift-D or by clicking on the large "Inventory" drop-down in the top toolbar and choosing "Datastores".

- Click on a group and then a datastore and in the right detail panel click on "Browse Datastore...". This should bring up a window that will allow you to browse that datastore. Each virtual machine and template will have its own directory in this datastore.

- Click on a directory named after one of your templates. The right panel will change to show you all the files in that directory.

- Right-click on the only file of type "Template VM" and click on "Add to Inventory".

- In the dialog box that pops up, give the template a name (presumably the same name as it currently has) and choose its location (presumably the same group it's in now).

- In the next dialog box choose the host to store the template on. This should be the same ESX server whose datastore you're browsing.

- After validating the template/VM, the wizard presents its last dialog with the details of your "VM". Click "Finish" and you're done.

- Close the data store browser and change your Virtual Infrastructure Client to "Virtual Machines and Templates" mode using Ctrl-Shift-V or by clicking on Inventory and choosing "Virtual Machines and Templates" and you'll see the template back in the inventory!

Friday, January 9, 2009

Upgrading from Plone 2.0.4 to 3.1.6

It turns out that upgrading isn't as simple as copying your Data.fs file to a new Zope instance running the latest versions of Zope and Plone. Go figure :) After much trial, frustration and searching I came across this blog where the author seems to have come to the same conclusion as I did: the only way to do the upgrade I desired was to upgrade Zope and Plone in lockstep. This means I'll have to upgrade Plone to 2.1, then Zope to 2.8, then Plone to 2.5, then Zope to 2.9, etc.

Luckily the Plone site has good documentation about the incremental upgrades and the whole process should be possible. Now all I have to do is go through the incremental upgrades on a virtual machine so I end up with a more-or-less pristine copy of the site for deployment on the new server.

Wednesday, January 7, 2009

About this blog

The idea is to have a semi-irregular (is that a word?) blog where I can record things I've learned in my profession of choice: IT, software engineering or "computers". I can then search my own blog to find solutions to problems I've encountered in the past. One possible side-effect of a blog such as this would also be that future employers can read through it to get a feeling for what I've done in the past; of course depending on the kinds of problems I've successfully solved that could be a bad thing too :)

In any case, I'm just kicking this blog off so I have some place for a brain dump when I need to. If you're not me, you're probably lost :) If you're not lost then welcome!